Jacek Gondzio (University of Edinburgh)

I shall address recent challenges in optimization, including problems which originate from the “Big Data” buzz. The existing and well-understood methods (such as interior point algorithms) which are able to take advantage of parallelism in the matrix operations will be briefly discussed. The new class of methods which work in the matrix-free regime will then be introduced. These new approaches employ iterative techniques such as Krylov subspace methods to solve the linear equation systems when search directions are computed. Finally, I shall comment on the well-known drawbacks of the fashionable but unreliable first-order methods for optimization and I shall suggest the parallelisation-friendly remedies which rely on the use of (inexact) second-order information.
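As a rough illustration of the matrix-free regime mentioned above, the following Python sketch solves a symmetric positive definite system with conjugate gradients using only operator applications, so the matrix is never formed. The operator (a diagonal plus a rank-one term), the function names and the tolerances are assumptions chosen for illustration and are not taken from the talk.

```python
import numpy as np

def cg_matrix_free(apply_A, b, tol=1e-10, max_iter=200):
    """Conjugate gradients that only needs v -> A v (A assumed SPD)."""
    x = np.zeros_like(b)
    r = b.copy()              # residual for the zero initial guess
    p = r.copy()
    rs = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol:
            break
        Ap = apply_A(p)
        alpha = rs / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

# Hypothetical operator A = diag(d) + u u^T, applied without storing A.
d = np.linspace(1.0, 10.0, 50)
u = np.ones(50) / np.sqrt(50.0)

def apply_A(v):
    return d * v + u * (u @ v)

b = np.arange(1.0, 51.0)
x = cg_matrix_free(apply_A, b)
residual_norm = np.linalg.norm(apply_A(x) - b)
```

In an interior point or Newton-type method, `apply_A` would stand for the action of the (possibly preconditioned) linear system that defines the search direction.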

Matthew Knepley (University of Chicago)

A common pitfall in large, complex simulations is a failure of convergence in the nonlinear solver, typically Newton's method or partial linearization with Picard's method. We present a framework for nonlinear preconditioning, based upon the composition of nonlinear solvers. We can define both left and right variants of the preconditioning operation, in analogy to the linear case. Although we currently lack a convincing convergence theory, we will present a powerful and composable interface for these algorithms, as well as examples which demonstrate their effectiveness.

Dave A. May (ETH Zurich), Jed Brown (Argonne National Laboratory), Laetitia Le Pourhiet (UPMC)

Complex multi-scale interactions characterize the dynamics of the Earth. Resolving three-dimensional structures and multi-physics processes over a wide range of spatio-temporal scales is a unifying grand challenge common to all branches of Earth science and one which must be addressed in order to achieve a comprehensive understanding of the Earth and other planets.

Numerical modeling has become an important technique to develop our understanding of the Earth as a coupled dynamical system, linking processes in the core, mantle, lithosphere, topography and the atmosphere. Computational geodynamics focuses on modeling the evolution of rocks over million-year time spans. Such long-term evolution of rocks is governed by the equations describing the dynamics of very viscous, creeping flow (i.e. the Stokes equations). The constitutive behavior of rocks over these time scales can be described as that of a visco-elasto-plastic material. Due to the strong temperature dependence and the presence of brittle fractures, the resulting effective non-linear viscosity (measured in Pa s) can possess enormous variations in magnitude, which may occur over a wide range of length scales ranging from meters to kilometers.

Of critical importance in performing 3D geodynamic simulations with sufficient rheological complexity is the preconditioner applied to the variable viscosity Stokes operator. The efficiency and robustness of this preconditioner ultimately controls the scientific throughput achievable and represents the largest computational bottleneck in all geodynamic software.

In this presentation, I outline a flexible Stokes preconditioning strategy which has been developed within pTatin3d – a finite element library specifically developed to study the long term evolution of the mantle-lithosphere.

We have made a number of fundamental design choices which result in a fast, highly scalable and robust Q2P1 finite element implementation which is suitable for solving a wide range of geodynamic applications. Specifically these choices include: (i) utilizing an inf-sup stable mixed finite element (with an unmapped pressure space) which provides a reliable velocity and pressure solution; (ii) expressing the problem in defect correction form so that Newton-like methods can be exploited; (iii) making extensive use of matrix-free operators that exploit local tensor products to drastically reduce the memory requirements, improve performance by 5-10x to attain 30% of Haswell FPU peak using AVX/FMA intrinsics, and improve on-node scalability of the sparse matrix-vector product; (iv) deferring a wide range of choices associated with the solver configuration to run-time.
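To illustrate why tensor-product structure helps matrix-free operators, here is a minimal Python sketch that applies a Kronecker-product operator without ever assembling it. The small random factors `A` and `B` are stand-ins chosen for illustration; this is not the pTatin3d kernel, which works on finite element bases and uses hand-tuned intrinsics.

```python
import numpy as np

def apply_kron(A, B, x):
    """Return (A kron B) @ x without forming the Kronecker product.

    With row-major reshaping, (A kron B) vec(X) = vec(A X B^T).
    """
    X = x.reshape(A.shape[1], B.shape[1])
    return (A @ X @ B.T).ravel()

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 4))
B = rng.standard_normal((5, 5))
x = rng.standard_normal(20)

y_free = apply_kron(A, B, x)      # stores only the 4x4 and 5x5 factors
y_full = np.kron(A, B) @ x        # stores the assembled 20x20 matrix
```

The matrix-free version keeps only the small factors in memory, which is the source of the reduced memory traffic in such schemes.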

The performance of the hybrid preconditioning strategy will be presented by examining the robustness of the preconditioner with respect to the topological structure and spatial variations within the viscosity field, together with its parallel scalability. In particular we will highlight the benefits of using hybrid coarse grid hierarchies, consisting of a mixture of Galerkin, assembled and matrix-free operators. Lastly, examples of 3D continental rifting will be presented to demonstrate the efficiency of the new methodology.

Mark Adams (Lawrence Berkeley National Laboratory), Matt Knepley (University of Chicago), Jed Brown (Argonne National Laboratory)

We discuss the use of segmental refinement (SR) to optimize the performance of multigrid on modern architectures by requiring minimal communication and enabling loop fusion and asynchronous processing, with minimal additional work relative to textbook-efficient multigrid algorithms. We begin by continuing the work of Brandt and Diskin (1994), investigating the robustness and quantitative work costs of SR, and consider extensions of the techniques to multi-scale analysis methods.

Oliver Rheinbach (Freiberg University of Mining and Technology), Axel Klawonn (University of Cologne), Martin Lanser (University of Cologne)

The solution of nonlinear problems requires fast and scalable parallel solvers. FETI-DP (Finite Element Tearing and Interconnecting) domain decomposition methods are well known parallel solution methods for problems from partial differential equations. Recently, different nonlinear versions of the well-known FETI-DP methods for linear problems have been introduced. In these methods the nonlinear problem is decomposed before linearization. This can be viewed as a strategy to localize computational work and extend scalability in the spirit of, e.g., the ASPIN approach. We show preliminary parallel scalability results for up to 262144 processor cores on the Mira BlueGene/Q supercomputer at Argonne National Laboratory.

Johannes Steiner (Università della Svizzera italiana), Rolf Krause (Università della Svizzera italiana)

For the simulation of structural mechanics the finite element method is one of the most widely used, if not the most popular, discretization methods. In fluid dynamics the finite volume method is a widely used multi-purpose method. Therefore the coupling of finite element and finite volume methods for Fluid-Structure-Interaction is of substantial interest. We present the coupling of these two different discretization methods for Fluid-Structure-Interaction using a monolithic approach. Typically for the coupling of two different discretization methods a partitioned coupling scheme is used, where the two sub-problems are handled separately with some exchange of data at the interface. In a monolithic formulation, however, the transfer of forces and displacements is fulfilled in a common set of equations for fluid and structure, which is solved simultaneously. For complex simulation domains, we end up with algebraic systems with a large number of degrees of freedom, which makes the use of parallel solvers mandatory. Here, a good choice of an efficient preconditioning strategy is important. Our solver strategies are based on the application of Newton’s method using iterative solvers within different multi-level methods. The multi-level methods are constructed on a nested hierarchy of unstructured meshes. We discuss both the coupling approach of the two different discretizations and the efficient solution of the arising large nonlinear system.

Simone Deparis (EPFL), Gwenol Grandperrin (EPFL), Radu Popescu (EPFL), Alfio Quarteroni (EPFL)

We present two strategies for preconditioning the unsteady Navier-Stokes equations discretised by the finite element method, both suited for parallel computing. The first one relies on an inexact block factorisation of the algebraic system, like SIMPLE, Pressure Convection-Diffusion (PCD), or Yosida, and on the approximation of the blocks by a 2-level Schwarz preconditioner or a multigrid one. We compare the different choices (SIMPLE, PCD, and Yosida) with respect to robustness and scalability. The parallelism is achieved by partitioning the finite element mesh into as many subdomains as there are available processes. With the same ideas, we develop preconditioners for fluid-structure interaction in hemodynamics and present the scalability of the newly defined algorithms. The second strategy is based on Schwarz type preconditioners and on assigning a fixed small number of processes per partition. On each subdomain, the local problem is solved exactly or inexactly in parallel by either a parallel direct method or an iterative one. We present the results on cases relevant to vascular flow simulations using Trilinos and the LifeV finite element libraries.
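For orientation, the block factorisation behind such preconditioners can be written as follows. The symbols are assumptions introduced here, not notation from the talk: $F$ is the velocity block, $B$ the discrete divergence, $S$ the Schur complement and $D$ the diagonal of $F$. The second line is the commonly cited SIMPLE variant, in which $F^{-1}$ is replaced by $D^{-1}$ in the Schur complement and in the upper factor.

```latex
\begin{pmatrix} F & B^T \\ B & 0 \end{pmatrix}
=
\begin{pmatrix} F & 0 \\ B & -S \end{pmatrix}
\begin{pmatrix} I & F^{-1} B^T \\ 0 & I \end{pmatrix},
\qquad S = B F^{-1} B^T,

P_{\mathrm{SIMPLE}}
=
\begin{pmatrix} F & 0 \\ B & -\tilde S \end{pmatrix}
\begin{pmatrix} I & D^{-1} B^T \\ 0 & I \end{pmatrix},
\qquad \tilde S = B D^{-1} B^T, \quad D = \operatorname{diag}(F).
```

Applying the inverse of such a preconditioner then requires only solves with $F$ and $\tilde S$, which is where the Schwarz or multigrid approximations of the blocks enter.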

Matthias Mayr (Technische Universität München), Michael W. Gee (Technische Universität München)

The numerical analysis of fluid-structure interaction problems has been a field of intensive research for years and plays an important role in engineering applications of all kinds. The coupling of incompressible fluid flow and deformable structures undergoing large deformations is of particular interest. When it comes to real world applications, it is crucial to make predictions about the physical problem at hand very accurately, but still efficiently and with reasonable costs. With respect to temporal discretization, one has to take care of certain aspects in order to obtain an accurate, consistent and efficient scheme.

When using different time integration schemes in the fluid and structure field as done in most applications, it is not guaranteed that the equilibrium in both fields is evaluated at exactly the same time instance. Hence, the coupling tractions that are exchanged at the interface do not match in time leading to a temporal inconsistency among the fields. We have incorporated temporal interpolations of the interface tractions in our monolithic solver and, thus, allow for an independent and field-specific choice of time integration schemes in fluid and structure field in a consistent manner [Mayr et al., SIAM J. Sci. Comput., 2014 (submitted)].

When looking at accuracy and using constant time step sizes in all time steps, a high level of temporal accuracy can only be achieved by choosing overall small time step sizes. However, this leads to a tremendous increase in computational time, especially when such small time step sizes are not necessary throughout the entire simulation in order to maintain the desired level of accuracy. By choosing the time step sizes adaptively and individually for each time step, the local discretization error of the applied time integration scheme can be limited to a user-given bound and, thus, one can achieve the desired level of accuracy by simultaneously limiting the computational costs. Therefore, we combine established error estimation procedures in the structure and fluid field (see e.g. [Zienkiewicz et al., Earthquake Engng. Struct. Dyn. (20), pp. 871-887, 1991], [Gresho et al., SIAM J. Sci. Comput. (30), pp. 2018-2054, 2008], [Kay et al., SIAM J. Sci. Comput. (32), pp. 111-128, 2010]) to build an adaptive time stepping algorithm for fluid-structure interaction problems. This algorithm is integrated into the monolithic solver framework proposed in [Mayr et al., 2014]. By controlling the local temporal discretization error in the structure and fluid field, an optimal time step size can be selected that satisfies the accuracy demands in both fields.

In the presentation, the proposed time stepping scheme for the monolithic fluid-structure interaction solver will be presented, together with examples that demonstrate the behaviour of the newly proposed method with respect to accuracy and computational costs.
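The idea of error-controlled step size selection in two coupled fields can be sketched as below. This uses the textbook step size controller for a scheme of a given order and simply takes the smaller of the two proposals; the function names, the safety factor and the clamping bounds are assumptions for illustration, and the sketch is not the algorithm of the cited papers.

```python
def propose_dt(dt, err, tol, order, safety=0.9, min_scale=0.2, max_scale=2.0):
    """Textbook controller: scale dt by (tol / err)^(1 / (order + 1))."""
    if err == 0.0:
        return dt * max_scale          # no measurable error: grow as far as allowed
    scale = safety * (tol / err) ** (1.0 / (order + 1))
    return dt * min(max_scale, max(min_scale, scale))

def propose_dt_fsi(dt, err_structure, err_fluid, tol, order_structure, order_fluid):
    """Pick a step size that meets the tolerance in both fields."""
    return min(
        propose_dt(dt, err_structure, tol, order_structure),
        propose_dt(dt, err_fluid, tol, order_fluid),
    )

# Hypothetical error estimates: the structure error is 8x the tolerance.
dt_next = propose_dt_fsi(0.1, 8e-3, 1e-3, 1e-3, order_structure=2, order_fluid=2)
```

Here the structure field is the more demanding one, so it dictates the next step size for the coupled problem.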

Thomas Richter (Heidelberg University), Stefan Frei (Heidelberg University)

We present a model for fluid-structure interactions that is completely based on Eulerian coordinate systems for fluid and structure. This model is strictly monolithic and allows for fully coupled and implicit discretization and solution techniques. Without the need for artificial transformation of domains, the Fully Eulerian model can deal with very large deformations and also contact. For capturing the interface between the solid and fluid domains we present the Initial Point Set, an auxiliary function that allows the structural stresses to be related to the reference configuration. Similar to front-capturing techniques for multiphase flows, the great computational challenge is the accurate discretization close to the interface. Here, we introduce a novel locally modified finite element method that resolves the interface by a special parametric setup of the basis functions. We can show optimal order convergence and also a robust condition number independent of the interface location. Further, and in contrast to established interface-resolving methods like XFEM, the number of unknowns and the algebraic structure of the system matrix do not depend on the interface location. This helps to design efficient solvers for problems with moving interfaces.

Michael A. Heroux (Sandia National Laboratories)

Extreme-scale computers present numerous challenges to linear solvers. Two major challenges are resilience in the presence of process loss and effective scaling of robustly preconditioned iterative methods on high bandwidth, high latency systems. In this presentation, we give an overview of several resilient computing models and their use with preconditioned iterative methods. These models enable effective iterative solutions in the presence of large variability in collectives performance, process loss and silent data corruption. We also present a new framework for effectively using traditional additive Schwarz preconditioners with communication-avoiding iterative methods, addressing one of the critical shortcomings to widespread use of these approaches.

Erin Carson (University of California, Berkeley), Sam Williams (Lawrence Berkeley National Lab), Michael Lijewski (Lawrence Berkeley National Lab), Nicholas Knight (University of California, Berkeley), Ann S. Almgren (Lawrence Berkeley National Lab), James Demmel (University of California, Berkeley)

Many large-scale numerical simulations in a wide variety of scientific disciplines use the geometric multigrid method to solve elliptic and/or parabolic partial differential equations. When applied to a hierarchy of adaptively-refined meshes, geometric multigrid often reaches a point where further coarsening of the grid becomes impractical as individual subdomain sizes approach unity. At this point, a common solution in practice is to terminate coarsening at some level and use a Krylov subspace method, such as BICGSTAB, to reduce the residual by a fixed factor. This is called a "bottom solve".

Each iteration of BICGSTAB requires multiple global reductions (MPI collectives), which involve communication between all processors. Because the number of BICGSTAB iterations required for convergence grows with problem size, and the time for each collective operation increases with machine scale, bottom solves can constitute a significant fraction of the overall multigrid solve time in large-scale applications.

In order to reduce this performance bottleneck, we have implemented, evaluated, and optimized a communication-avoiding $s$-step formulation of BICGSTAB (CA-BICGSTAB for short) as a high performance, distributed-memory bottom solver for geometric multigrid. This is the first time an $s$-step Krylov subspace method has been leveraged to improve multigrid bottom solver performance. The CA-BICGSTAB method is equivalent in exact arithmetic to the classical BICGSTAB method, but performs fewer global reductions over a fixed number of iterations at the cost of a constant factor of additional floating point operations and peer-to-peer messages. Furthermore, while increasing $s$ reduces the number of global collectives over a fixed number of iterations, it can also slow down convergence, requiring a greater number of total iterations to reduce the residual by the same factor. These tradeoffs between bandwidth, latency, and computation and between time per iteration and total iterations create an interesting design space to explore.

We used a synthetic benchmark to perform detailed performance analysis and demonstrate the benefits of CA-BICGSTAB. The best implementation was integrated into BoxLib, an AMR framework, in order to evaluate the benefits of the CA-BICGSTAB bottom solver in two applications: LMC, a low-Mach number combustion code, and Nyx, used for cosmological simulations. Using up to 32,768 cores on the Cray XE6 at NERSC, we obtained bottom solver speedups on synthetic problems and in real applications. This improvement resulted in a speedup in the overall geometric multigrid solve in real applications.

Piyush Sao (Georgia Institute of Technology), Richard Vuduc (Georgia Institute of Technology)

We show how to use the idea of self-stabilization, which originates in work by Dijkstra (1974) for problems in distributed control, to make fault-tolerant iterative solvers. At a high level, a self-stabilizing system is one that, starting from an arbitrary state (valid or invalid), reaches a valid state within a finite number of steps. This property imbues the system with a natural means of tolerating soft faults.

Our basic recipe for applying self-stabilization to numerical algorithm design is roughly as follows. First, we regard the execution of a given solver algorithm as the “system”. Its state consists of some or all of the variables that would be needed to continue its execution. Then, we identify a condition that must hold at each iteration for the solver to converge. When the condition holds, the solver is in a “valid” state; otherwise, it is in an invalid state. Finally, we augment the solver algorithm with an explicit mechanism that will bring it from an invalid state to a valid one within a guaranteed finite number of iterations. Importantly, the mechanism is designed so that the solver algorithm need not detect a fault explicitly. Rather, it is designed to cause a transition from an invalid to valid state automatically.

We have thus far devised two proof-of-concept self-stabilizing iterative linear solvers. One is a self-stabilized variant of steepest descent (SD) and the other is a variant of the conjugate gradient (CG) method. Our self-stabilized versions of SD and CG do require small amounts of fault-detection, e.g., we may check only for NaNs and infinities. Otherwise, under specific assumptions on the type and rates of transient soft faults, we can show formally that our modified solvers are self-stabilizing.

To get an idea of how this process works, consider the case of CG. Recall that CG constructs A-orthogonal search directions that span a Krylov subspace. In each iteration, it updates the solution estimate by taking a step of optimal size in the computed search direction. A fault, when it occurs, can

Our self-stabilized CG, through a periodic correction scheme we have devised, enforces the correctness of the problem being solved and restores certain local optimality and orthogonality properties of the generated search directions and residuals. One can prove that maintaining these properties satisfies the Zoutendijk Condition, which allows the (modified) solver to converge asymptotically, even in the presence of faults, provided the correction mechanism is invoked “frequently enough.” And in the absence of any faults, our self-stabilized CG remains equivalent to CG up to roundoff error.
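The flavour of a periodic correction step can be conveyed with the following simplified Python sketch. It is an illustration under stated assumptions rather than the authors' algorithm: every `period` iterations the residual is recomputed from the current iterate (assumed to happen reliably), and each step uses a locally optimal step length and a direction update that is A-orthogonal to the previous direction. The function name, the fault model (adding a constant to the residual) and the test matrix are all hypothetical.

```python
import numpy as np

def self_stabilizing_cg_sketch(A, b, period=5, max_iter=500, rtol=1e-10, fault_at=None):
    """CG-like iteration with a periodic correction step (illustrative only)."""
    x = np.zeros_like(b, dtype=float)
    r = b.astype(float).copy()
    p = r.copy()
    b_norm = np.linalg.norm(b)
    for k in range(max_iter):
        if fault_at is not None and k == fault_at:
            r = r + 100.0             # simulated transient soft fault in the residual
        if k % period == 0:
            r = b - A @ x             # correction: recompute the true residual
            if np.linalg.norm(r) <= rtol * b_norm:
                return x, k
        q = A @ p
        pq = p @ q
        if pq <= 0.0:
            p = r.copy()              # degenerate direction: restart from the residual
            continue
        alpha = (r @ p) / pq          # locally optimal step length along p
        x = x + alpha * p
        r = r - alpha * q
        beta = -(r @ q) / pq          # new direction is A-orthogonal to the old one
        p = r + beta * p
    return x, max_iter

n = 30
A = 3.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # SPD tridiagonal test matrix
b = np.ones(n)
x_clean, it_clean = self_stabilizing_cg_sketch(A, b)
x_fault, it_fault = self_stabilizing_cg_sketch(A, b, fault_at=3)
```

Note that the solver never detects the fault explicitly; the next correction step simply returns it to a state from which the iteration converges.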

To illustrate the fault tolerance property of self-stabilized algorithms, we test our self-stabilized CG experimentally by analyzing its convergence and overhead for different types and rates of faults in selective reliability mode, where we assume a small fraction of computation can be done in guaranteed reliable mode. We present a theoretical analysis relating the amount of reliable computation required, the rate of faults, and the problem being solved.

Beyond the specific findings for the case of self-stabilized CG, we believe self-stabilization has promise to become a useful tool for constructing resilient solvers more generally.

James Elliott (Sandia National Laboratories), Mark Hoemmen (Sandia National Laboratories), Frank Mueller (North Carolina State University)

Much resilience research has focused on tolerating parallel process failures, either by improving checkpoint / restart performance, or introducing process replication. This matters because large-scale computers experience frequent single-process failures. However, it excludes the increasing likelihood of hardware anomalies which do not cause process failure. Even one incorrect arithmetic operation or memory corruption may cause incorrect results or increase run time, with no notification from the system. We refer to this class of faults as silent data corruption (SDC). Increasing parallelism and decreasing transistor feature sizes will make it harder to catch and correct SDC in hardware. Hardware detection and correction increase power consumption by anywhere from double-digit percentages to more than a factor of two. The largest parallel computers already have tight power limits, which will only get tighter. Thus, future extreme-scale systems may expose some SDC to applications. This will shift some burden to algorithms: either to detect and correct SDC, or absorb its effects and get the correct solution regardless.

This motivates our work on understanding how iterative linear solvers and preconditioners behave given SDC, and improving their resilience. We have yet to see a need for developing entirely new numerical methods. Rather, we find that hardening existing algorithms by careful introduction of more robust numerical techniques and invariant checks goes a long way. We advocate combining numerical analysis and systems approaches to do so.

Understanding algorithms' behavior in the presence of SDC calls for a new approach to fault models and experiments. Most SDC injection experiments randomly flip memory bits in running applications. However, this only shows average-case behavior for a particular subset of faults, based on an artificial hardware model. Algorithm developers need to understand worst-case behavior, and reason at an algorithmic level with the higher-level data types they actually use. Also, we know so little about how SDC may manifest in future hardware, that it seems premature to draw conclusions about the average case.

We argue instead that numerical algorithms can benefit from a numerical unreliability fault model, where faults manifest as unbounded perturbations to floating-point data. This favors targeted experiments that inject one or few faults in key parts of an algorithm, giving insight into the algorithm's response. It lets us extend lessons learned in rounding error analysis to the unbounded fault case. Furthermore, it leads to a resilience approach we call the skeptical programmer. Inexpensive checks of algorithm-specific invariants can exclude large errors in intermediate quantities. This bounds any remaining errors, making it easier to converge through them. While this requires algorithm-specific analysis, it identifies algorithms that naturally keep intermediate quantities bounded. These invariants also help detect programmer error. A healthy skepticism about subroutines' correctness makes sense even with perfectly reliable hardware, especially since modern applications may have millions of lines of code and rely on many third-party libraries.
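One inexpensive invariant of the kind described above can be sketched in Python as follows. The check relies on the fact that the 2-norm of a matrix-vector product cannot exceed the Frobenius norm of the matrix times the 2-norm of the vector. The function name, the slack factor and the injected fault are assumptions for illustration and are not taken from the talk.

```python
import numpy as np

def matvec_is_plausible(y, x, a_norm_bound, slack=1e-8):
    """Reject y = A @ x if it is non-finite or larger than ||A|| * ||x|| allows."""
    if not np.all(np.isfinite(y)):
        return False
    return np.linalg.norm(y) <= (1.0 + slack) * a_norm_bound * np.linalg.norm(x)

rng = np.random.default_rng(1)
A = rng.standard_normal((6, 6))
x = rng.standard_normal(6)
a_norm_bound = np.linalg.norm(A, "fro")   # computed once, assumed reliable

y = A @ x
y_corrupt = y.copy()
y_corrupt[2] = 1e30                        # simulated silent data corruption

ok_clean = matvec_is_plausible(y, x, a_norm_bound)
ok_corrupt = matvec_is_plausible(y_corrupt, x, a_norm_bound)
```

A check like this cannot catch small perturbations, but it bounds the size of any undetected error, which is the point of the approach.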

Skepticism about intermediate results does not suffice for correctness despite SDC. This calls for a selective reliability programming model that lets us choose parts of an algorithm that we insist be correct. We combine selective reliability and skepticism about intermediate results to make iterative linear solvers reliable despite unbounded faults. This relies on an inner-outer solver approach, which does most of its work in the unreliable inner solver, which in turn preconditions the reliable outer solver. Using a flexible Krylov method for the latter absorbs inner faults. Our experiments illustrate this approach in parallel with different kinds of preconditioners in inner solves.

Jed Brown (Argonne National Laboratory), Mark Adams (Lawrence Berkeley National Laboratory), Dave May (ETH Zurich), Matthew Knepley (University of Chicago)

An interpretation of the full approximation scheme (FAS) multigrid method identifies a "$\tau$-correction" that represents the incremental influence of the fine grid on the coarse grid. This influence is robust to long-wavelength perturbations to the problem, allowing reuse between successive nonlinear iterations, time steps, or solves. For non-smooth problems such as brittle failure and contact, this permits fine grids to be skipped in regions away from the active nonlinearity. This technique provides benefits similar to adaptive mesh refinement, but is more general because the problem can have arbitrary fine-scale microstructure. We introduce the new method and present numerical results in the context of long-term lithosphere applications.
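For reference, the standard FAS coarse-grid equation makes the role of this term explicit. The notation below is an assumption introduced here and follows the usual textbook form: $N_h$ and $N_H$ are the fine and coarse nonlinear operators, $I_h^H$ is the residual restriction, $\hat I_h^H$ is the state restriction, and $u_h$ is the current fine-grid approximation.

```latex
N_H(u_H) = I_h^H f_h + \tau_h^H,
\qquad
\tau_h^H = N_H\!\left(\hat I_h^H u_h\right) - I_h^H N_h(u_h).
```

Reusing a previously computed $\tau_h^H$ lets the coarse problem retain the fine-grid influence without revisiting the fine grid, which is the reuse the abstract refers to.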

Jack Poulson (Georgia Institute of Technology), Greg Henry

Evaluating the norm of a resolvent over a window in the complex plane provides an illuminating generalization of a scatter-plot of eigenvalues and is of obvious interest for analyzing preconditioners. Unfortunately the common perception is that such a computation is embarrassingly parallel, and so little effort has been expended towards reproducing the functionality of the premier tool for pseudospectral computation, EigTool (Wright et al.), for distributed-memory architectures in order to enable the analysis of matrices too large for workstations.

This talk introduces several high-performance variations of Lui's triangularization followed by inverse iteration approach which involve parallel reduction to (quasi-)triangular or Hessenberg form followed by interleaved Implicitly Restarted Arnoldi iterations driven by multi-shift (quasi-)triangular or Hessenberg solves with many right-hand sides. Since multi-shift (quasi-)triangular solves can achieve a very high percentage of peak performance on both sequential and parallel architectures, such an approach both improves the efficiency of sequential pseudospectral computations and provides a high-performance distributed-memory scheme. Results from recent implementations within Elemental (P. et al.) will be presented for a variety of large dense matrices and practical convergence-monitoring schemes will be discussed.
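For small matrices, the quantity being computed can be illustrated with a brute-force Python sketch: the resolvent norm at a point $z$ equals the reciprocal of the smallest singular value of $zI - A$. This is only a reference computation with hypothetical function and variable names; it performs a full SVD per grid point and is not the triangularization and inverse iteration approach discussed in the talk.

```python
import numpy as np

def resolvent_norm_grid(A, re_vals, im_vals):
    """Return ||(zI - A)^{-1}||_2 for z = x + iy over a rectangular grid."""
    n = A.shape[0]
    identity = np.eye(n)
    norms = np.empty((len(im_vals), len(re_vals)))
    for i, y in enumerate(im_vals):
        for j, x in enumerate(re_vals):
            z = complex(x, y)
            # Singular values are returned in descending order; take the smallest.
            s_min = np.linalg.svd(z * identity - A, compute_uv=False)[-1]
            norms[i, j] = np.inf if s_min == 0.0 else 1.0 / s_min
    return norms

# For a normal matrix the resolvent norm is 1 / (distance to the nearest eigenvalue).
A = np.diag([1.0, 2.0])
grid = resolvent_norm_grid(A, re_vals=[0.0, 1.5], im_vals=[0.0])
```

Each grid point is independent here, which is the source of the perception that the computation is embarrassingly parallel; the cost per point is what the high-performance variants aim to reduce.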

Frederic Legoll (ENPC)

The Multiscale Finite Element Method (MsFEM) is a popular Finite Element type approximation method for multiscale elliptic PDEs. In contrast to standard finite element methods, the basis functions that are used to generate the MsFEM approximation space are specifically adapted to the problem at hand. They are precomputed solutions (in an offline stage) to some appropriate local problems, set on coarse finite elements. Next, the online stage consists in using the space generated by these basis functions (the dimension of which is limited) to build a Galerkin approximation of the problem of interest.

Many tracks, each of them leading to a specific variant of the MsFEM approach, have been proposed in the literature to precisely define these basis functions. In this work, we introduce a specific variant, inspired by the classical work of Crouzeix and Raviart, where the continuity of the solution across the edges of the mesh is enforced only in a weak sense. This variant is particularly interesting for problems set on perforated media, where inclusions are not periodically located. The accuracy of the numerical solution is then extremely sensitive to the boundary conditions set on the MsFEM basis functions. The Crouzeix-Raviart type approach we introduce proves to be very flexible and efficient.

Time permitting, we will also discuss a shape optimization problem for multiscale materials, which also leads to expensive offline computations in order to make the online optimization procedure affordable.

Jakub Šístek (Academy of Sciences of the Czech Republic), Bedřich Sousedík (University of Southern California), Jan Mandel (University of Colorado Denver)

In the first part of the talk, components of the Balancing Domain Decomposition based on Constraints (BDDC) method will be recalled. The method solves large sparse systems of linear equations resulting from discretisation of PDEs. The problem on the interface among subdomains is solved by the preconditioned conjugate gradient method, while one step of BDDC serves as the preconditioner.

In the second part of the talk, the algorithm of Adaptive-Multilevel BDDC will be described. It is based on a combination of two extensions of the standard BDDC method: The Adaptive BDDC, which aims at problems with severe numerical difficulties, generates constraints based on prior analysis of the problem and eliminates the worst modes from the space of admissible functions. Generalised eigenvalue problems are solved at each face between two subdomains, and the eigenvectors corresponding to a few largest eigenvalues are used for construction of the coarse space. The Multilevel BDDC is a recursive application of the BDDC method to successive coarse problems aiming at very large problems solved using thousands of compute cores. Finally, the parallel implementation of these concepts within our open-source BDDCML package will be presented. Performance of the solver is analysed on several benchmark and engineering problems, and main issues with an efficient implementation of both Adaptive and Multilevel extensions will be discussed.

Sanja Singer (University of Zagreb), Vedran Novakovic (University of Zagreb), Sasa Singer (University of Zagreb)

The generalized eigenvalue problem is of significant practical importance, especially in structural engineering, where it arises in vibration and buckling problems.

The Falk–Langemeyer method could be a method of choice for parallel solving of a definite generalized eigenvalue problem $Ax = \lambda Bx$, where $A$ and $B$ are Hermitian, and the pair is definite, i.e., there exist constants $\alpha$ and $\beta$ such that $\alpha A + \beta B$ is positive definite.

In addition, if both $A$ and $B$ are positive definite, after a two-sided scaling with a diagonal matrix (this step is not necessary) and the Cholesky factorization of both matrices, the problem can be seen as the computation of the generalized singular value decomposition (GSVD) of the two Cholesky factors.

Here we present how to parallelize the Falk–Langemeyer method by using a technique similar to that used in the parallelization of the one-sided Jacobi method for the SVD of a single matrix. By appropriate blocking of the method, an almost ideal load balancing between all available processors is obtained. A similar blocking technique, now on a processor level, can be used to exploit local cache memory to further speed up the process.
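The link between a positive definite pair and the generalized SVD of its Cholesky factors can be written out in one line. The symbols $A$, $B$, $R_A$, $R_B$ and $\sigma$ below are notation assumed here for illustration only, and the optional diagonal scaling is omitted.

```latex
A = R_A^{*} R_A, \qquad B = R_B^{*} R_B
\quad\Longrightarrow\quad
\lambda \;=\; \frac{x^{*} A x}{x^{*} B x}
        \;=\; \frac{\lVert R_A x \rVert_2^{2}}{\lVert R_B x \rVert_2^{2}}
        \;=\; \sigma^{2}
```

Here $(\lambda, x)$ is any eigenpair of $Ax = \lambda Bx$, so each eigenvalue is the square of a generalized singular value $\sigma$ of the pair $(R_A, R_B)$, and a one-sided method can work on the factors without forming $A$ and $B$ again.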

Neven Krajina (University of Zagreb)

In his dissertation, Pietzsch described a one-sided algorithm for diagonalization of skew-symmetric matrices which calculates the eigenvalues to high relative accuracy by using only real arithmetic. While there are other algorithms for the same purpose, like the QR method of Ward and Gray or the Jacobi-like method of Paardekooper, they compute the eigenvalues of a skew-symmetric matrix $A$ up to an absolute error bound of $f(n)\,\|A\|_2\,\varepsilon$, where $f(n)$ is some slowly growing function of the matrix order $n$ and $\varepsilon$ is the machine precision. Thus, eigenvalues of larger modulus are computed with high relative accuracy, while the smaller ones may not be relatively accurate at all. On the other hand, we can also reduce our matrix to tridiagonal skew-symmetric form and compute the eigenvalues by the bidiagonal QR method (without shifts) with high relative accuracy. Although Pietzsch's method has the merit of accuracy, its disadvantage, as with other Jacobi-like methods, is speed, since algorithms of this class are known to be quite slow despite their quadratic convergence.

The algorithm can be split into two main parts: first we decompose the skew-symmetric matrix by a Cholesky-like algorithm of Bunch into a lower-triangular factor and a block diagonal matrix consisting of $2 \times 2$ blocks. In the second part, we orthogonalize the columns of the triangular factor using symplectic transformations.

In this talk, we describe an implementation of the above-mentioned method on graphics processing units. While retaining the same accuracy, our implementation shows substantial speedup in comparison to the sequential algorithm.

Martin Bečka (Slovak Academy of Sciences), Gabriel Okša (Slovak Academy of Sciences), Marian Vajteršic (University of Salzburg)

A fast and accurate one-sided Jacobi SVD dense routine (by Drmac and Veselic) has been part of LAPACK since version 3.2. Besides high accuracy, it offers performance comparable to xGESVD. Although this serial algorithm is not easily parallelizable, some of its ideas, together with a dynamic ordering of parallel tasks and a suitable blocking data strategy, lead to an algorithm competitive with PxGESVD of ScaLAPACK.

The matrix is logically distributed by block columns, where the columns of each block are part of one orthogonal basis of a subspace participating in the orthogonalization iteration process. The dynamic ordering for orthogonalization of subspaces significantly reduces the number of parallel iterations of the algorithm. When l denotes the number of subspaces, then l/2 of them can be orthogonalized in parallel on p=l/2 processors. Breaking this relation and choosing l = p/k, we come to a reduction of iteration steps, as well as to an improvement of scalability of the whole algorithm. Computational experiments show that this one-sided block-Jacobi algorithm is comparable to PxGESVD; it runs even faster for smaller values of p.
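To make the basic orthogonalization step concrete, the following is a minimal sketch of the plain serial one-sided Jacobi iteration on single columns with a fixed cyclic ordering. It is not the blocked, dynamically ordered parallel algorithm of the talk, nor the LAPACK routine; the function name and parameters are hypothetical.

```python
import math


def one_sided_jacobi_singular_values(A, tol=1e-12, max_sweeps=30):
    """Singular values of a dense matrix (list of rows) by one-sided Jacobi.

    Pairs of columns are rotated until all columns are mutually orthogonal;
    the column norms are then the singular values.
    """
    m, n = len(A), len(A[0])
    cols = [[A[i][j] for i in range(m)] for j in range(n)]  # column storage
    for _ in range(max_sweeps):
        rotated = False
        for p in range(n - 1):
            for q in range(p + 1, n):
                alpha = sum(x * x for x in cols[p])
                beta = sum(x * x for x in cols[q])
                gamma = sum(x * y for x, y in zip(cols[p], cols[q]))
                if abs(gamma) <= tol * math.sqrt(alpha * beta):
                    continue  # columns p and q are already orthogonal
                rotated = True
                # Rotation angle that makes the two columns orthogonal.
                zeta = (beta - alpha) / (2.0 * gamma)
                t = math.copysign(1.0, zeta) / (abs(zeta) + math.sqrt(1.0 + zeta * zeta))
                c = 1.0 / math.sqrt(1.0 + t * t)
                s = c * t
                for i in range(m):
                    xp, xq = cols[p][i], cols[q][i]
                    cols[p][i] = c * xp - s * xq
                    cols[q][i] = s * xp + c * xq
        if not rotated:
            break  # a full sweep without rotations: converged
    return sorted((math.sqrt(sum(x * x for x in col)) for col in cols), reverse=True)
```

In a block variant, each "column" above becomes a block of columns and the scalar rotation is replaced by a small orthogonal transformation, which is what makes the choice of block pairs per parallel step matter.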

Yusaku Yamamoto (The University of Electro-Communications), Lang Zhang, Shuhei Kudo

The eigenvalue problem of a real symmetric matrix, $Ax = \lambda x$, is an important problem that has applications in quantum chemistry, electronic structure calculation and statistical calculation. In this study, we deal with the problem of computing all the eigenvalues and eigenvectors of a symmetric matrix $A$ on a shared-memory parallel computer.

The standard method for the symmetric eigenvalue problem is based on tri-diagonalization of the coefficient matrix. In fact, LAPACK, which is one of the standard linear algebra libraries, also adopts this approach. However, the tri-diagonalization algorithm is complicated and its parallel granularity is small. Due to this, when solving a small-sized problem, the overhead of communication and synchronization between processors becomes dominant and the performance saturates with a small number of processors.

In this talk, we focus on the block-Jacobi algorithm for solving the symmetric eigenvalue problem, which is a generalization of the well-known Jacobi algorithm. It works on a matrix partitioned into small blocks and tries to diagonalize the matrix by eliminating the off-diagonal blocks by orthogonal transformations. Although this algorithm requires much more work than the tri-diagonalization based algorithm, it has simpler computational patterns and larger parallel granularity. Thanks to these features, the algorithm is easier to optimize on modern high performance processors. Moreover, it is expected to attain better scalability for small-size problems because the overhead of inter-processor communication and synchronization is smaller.

Based on this idea, we developed an eigenvalue solver based on the block-Jacobi algorithm for a shared-memory parallel computer. In the previous parallel implementations of the block Jacobi method, the cyclic block-Jacobi method, which eliminates the off-diagonal blocks in a pre-determined order, has been mainly used. Though this method is easier to parallelize, its convergence is relatively slow. For this reason, we chose to use the classical block-Jacobi method, which eliminates the off-diagonal block with the largest norm at each step. This method generally converges faster than the cyclic block-Jacobi method. To parallelize this method, it is necessary to find the set of off-diagonal blocks that can be eliminated in parallel and whose sum of norms is as large as possible. We can find such a set by using the idea of maximum weight matching from graph theory. In our algorithm, we find a near-maximum weight matching by using a greedy algorithm. Based on a theoretical analysis, our method is shown to have excellent properties such as global convergence and locally quadratic convergence.
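The selection of blocks that can be eliminated in parallel can be sketched as a greedy matching on the block indices. This is an illustrative sketch only: the function name and the `weights` input (a symmetric table of off-diagonal block norms) are assumptions, and the authors' actual selection rule may differ in details such as tie-breaking.

```python
def greedy_block_matching(weights):
    """Pick disjoint index pairs (i, j), i < j, greedily by decreasing weight.

    weights[i][j] is assumed to hold the norm of off-diagonal block (i, j).
    Two blocks can be eliminated in parallel only if they share no block
    row or block column, i.e. the chosen pairs form a matching.
    """
    nb = len(weights)
    candidates = sorted(
        ((weights[i][j], i, j) for i in range(nb) for j in range(i + 1, nb)),
        reverse=True,
    )
    used = set()
    chosen = []
    for _, i, j in candidates:
        if i not in used and j not in used:
            chosen.append((i, j))
            used.update((i, j))
    return chosen
```

The greedy choice is not guaranteed to give the maximum weight matching, but it always contains the block with the largest norm, which is the property the classical Jacobi strategy relies on.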

We implemented our algorithm using OpenMP and evaluated its performance on an Intel Xeon processor with 6 cores (12 threads). When solving an eigenvalue problem of a 3,000 by 3,000 matrix, the parallel speedup was 3.8 with 6 cores. When compared with LAPACK, which uses the tri-diagonalization based algorithm, our algorithm was slower, but in terms of the parallel speedup, our algorithm was superior. Also, when the input matrix is close to diagonal, the convergence of our algorithm was improved. From these results, we expect that our algorithm can be superior to the tri-diagonalization based one when solving a number of eigenvalue problems whose coefficient matrices are slightly different from each other using a many-core processor.

Andreas Schäfer (University of Erlangen-Nuremberg), Dietmar Fey (University of Erlangen-Nuremberg)

We present an advanced latency hiding algorithm for tightly coupled, iterative numerical methods. As a first, it combines overlapping calculation and communication with wide halos which limit communication to every k-th step. This approach trades computational overhead for an improved decoupling of neighboring nodes. Thereby, it can effectively hide network latencies that exceed the compute time for a single time step. It is most beneficial for strong scaling setups and accelerator-equipped systems. We provide insights into how this algorithm can be expanded to improve fault resilience.
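The wide-halo idea can be illustrated on a 1D three-point stencil split into two subdomains. The sketch below is a serial simulation under simple assumptions (fixed end values, two subdomains, hypothetical function names); it only shows why a halo of width k allows k local steps between exchanges, and does not model overlapping of communication with computation.

```python
def step(u):
    """One 3-point smoothing step; the two end cells are left unchanged."""
    interior = [0.25 * u[i - 1] + 0.5 * u[i] + 0.25 * u[i + 1] for i in range(1, len(u) - 1)]
    return [u[0]] + interior + [u[-1]]


def run_global(u, steps):
    """Reference: update the whole array in one piece."""
    for _ in range(steps):
        u = step(u)
    return u


def run_wide_halo(u, steps, k):
    """Two subdomains with halos of width k, exchanged only every k steps."""
    n = len(u)
    mid = n // 2
    assert 1 <= k <= min(mid, n - mid)
    left = u[:mid + k]    # owns cells [0, mid), halo holds [mid, mid + k)
    right = u[mid - k:]   # owns cells [mid, n), halo holds [mid - k, mid)
    exchanges = 0
    for s in range(steps):
        if s > 0 and s % k == 0:
            # Refresh each halo from the neighbour's owned cells.
            left[mid:] = right[k:2 * k]
            right[:k] = left[mid - k:mid]
            exchanges += 1
        # Stale data creeps one cell into each halo per step, so after
        # k steps the owned cells are still exact.
        left = step(left)
        right = step(right)
    return left[:mid] + right[k:], exchanges
```

Each subdomain redundantly updates its halo cells, which is the computational overhead traded for fewer, larger messages.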

George Bosilca (University of Tennessee), Thomas Herault (University of Paris-Sud), Aurelien Bouteiller (University of Tennessee)

Time-to-completion and energetic costs are some of the units used to measure the impact of fault tolerance on production parallel applications. These costs have an extremely complex definition and involve many parameters. This talk will cover some of the most prominent parameters for traditional fault tolerance approaches, and describe a toolbox and its corresponding API. This extension to MPI is called User Level Failure Mitigation, and allows developers to design and implement the minimal and most efficient fault tolerance model needed by their parallel applications.

Sebastien Cayrols (INRIA), Laura Grigori (INRIA), Sophie Moufawad (INRIA)

In this talk we discuss a communication avoiding ILU0 preconditioner for solving large linear systems of equations by using iterative Krylov subspace methods. Our approach allows the ILU0 preconditioner to be computed and applied with no communication, by ghosting some of the input data and performing redundant computation. To avoid communication, an alternating reordering algorithm is introduced for structured and well partitioned unstructured matrices; it requires the input matrix to be ordered by using a graph partitioning technique such as k-way partitioning or nested dissection. We present performance results of CA-ILU0 and compare it to classical ILU0, Block Jacobi and RAS preconditioners.

Dominik Göddeke (Technical University of Dortmund), Mirco Altenbernd (Technical University of Dortmund), Dirk Ribbrock (Technical University of Dortmund)

Hierarchical multigrid methods are widely recognised as the only (general) class of algorithms to solve large, sparse systems of equations in an asymptotically optimal and thus algorithmically scalable way. For a wide range of problems, they form the key building block that dominates runtime, in particular in combination with numerically powerful implicit approaches for nonstationary phenomena and Newton-type schemes to treat nonlinearities.

In this talk, we analyse and discuss the numerical behaviour of geometric finite-element multigrid solvers in the presence of hardware failures and incorrect computation: Events like the loss of entire compute nodes, bitflips in memory, or silent data corruption in arithmetic units are widely believed to become the rule rather than the exception. This is due to permanently increasing node counts at scale, in combination with chip complexity, transistor density, and tight energy constraints.

We first analyse the impact of small- and large-scale failures and faults, i.e., partial loss of iterates or auxiliary arrays, on the convergence behaviour of finite-element multigrid algorithms numerically. We demonstrate that as long as the input data (matrix and right hand side) are not affected, multigrid always converges to the correct solution.

We then introduce a minimal checkpointing scheme based on the inherent data compression within the multilevel representation. This approach, in combination with partial recovery, almost maintains the convergence rates of the fault-free case even in the presence of frequent failures. We analyse the numerical and computational trade-off between the size of the checkpoint information and the performance and convergence of the solver. Special emphasis is given to the effect of discontinuous jumps in the solution that appear when components are eliminated, or restored from old intermediate data.

Simplice Donfack (Università della Svizzera italiana), Stanimire Tomov (University of Tennessee), Jack Dongarra (University of Tennessee), Olaf Schenk (Università della Svizzera italiana)

We present an efficient hybrid CPU/GPU approach that is portable, dynamically and efficiently balances the workload between the CPUs and the GPUs, and avoids data transfer bottlenecks that are frequently present in numerical algorithms. Our approach determines the amount of initial work to assign to the CPUs before the execution, and then dynamically balances workloads during the execution. Then, we present a theoretical model to guide the choice of the initial amount of work for the CPUs. The validation of our model allows our approach to self-adapt on any architecture using the manufacturer's characteristics of the underlying machine. We illustrate our method for the LU factorization. For this case, we show that the use of our approach combined with a communication avoiding LU algorithm is efficient. For example, our experiments on a 24-core AMD Opteron 6172 show that by adding one GPU (Tesla S2050) we accelerate LU by up to 2.4x compared to the corresponding routine in MKL using 24 cores. The comparisons with MAGMA also show significant improvements.

Nicholas Knight (University of California, Berkeley), James Demmel (University of California, Berkeley), Grey Ballard (University of California, Berkeley), Edgar Solomonik (University of California, Berkeley)

Communication, i.e., data movement, typically dominates the algorithmic costs of computing the eigenvalues and eigenvectors of large, dense symmetric matrices. This observation motivated multistep approaches for tridiagonalization, which perform more arithmetic operations than one-step approaches, but can asymptotically reduce communication.

We study multistep tridiagonalization on a distributed-memory parallel machine and propose several algorithmic enhancements that can asymptotically reduce communication compared to previous approaches. In the first step, when reducing a full matrix to band form, we apply the ideas of the tall-skinny QR algorithm and Householder reconstruction to minimize the number of interprocessor messages (along the critical path). In subsequent steps, when reducing a band matrix to one with narrower bandwidth, we extend Lang's one-step band tridiagonalization algorithm to a multistep approach, enabling a reduction in both messages and on-node data movement. For thicker bands, we propose a pipelined approach using a 2D block cyclic layout (compatible with ScaLAPACK's), where we agglomerate orthogonal updates across bulge chases; for thinner bands, we apply the idea of chasing multiple (consecutive) bulges, previously studied in shared-memory. In addition to asymptotically decreasing algorithmic costs over a wide range of problem sizes, when the input data is so large that its storage consumes all but a constant fraction of the machine's memory capacity, our approach attains known lower bounds on both the number of words moved between processors and on the number of messages in which these words are sent (along the critical path). Moreover, our approach also benefits the sequential case, asymptotically reducing the number of words and messages moved between levels of the memory hierarchy, and, again, attaining lower bounds. We benchmark and compare our approach with previous works like ELPA to evaluate whether minimizing communication improves overall performance.

We also consider the limitations of our approach and discuss possible extensions. First, the tradeoffs of multistep approaches are much greater when eigenvectors are computed, due to the cost of back-transformation, i.e., applying the orthogonal transformations generated by the tridiagonalization steps. Our approach shows, perhaps surprisingly, that it can be beneficial to perform band reduction steps redundantly, on different subsets of processors, in order to reduce communication involved with the back-transformation. In contrast to previous approaches, which perform only one or two tridiagonalization steps in order to minimize the back-transformation costs, our results show that it can be worthwhile to perform more steps, even when eigenvectors are computed. In practice, we navigate the back-transformation tradeoffs with performance modeling and parameter tuning. Second, if more than a constant fraction of memory is unused, other approaches are needed to exploit this memory to further reduce communication and attain the (smaller) lower bounds that apply; we mention our ongoing work for this scenario. Third, we show how our approach extends to many other applications of multistep band reduction, like Hermitian tridiagonalization, bidiagonalization, and Hessenberg reduction.

Sheng Fang (University of Oxford), Raphael Hauser (University of Oxford)

A novel algorithm for leading part SVD computations of large scale dense matrices is proposed. The algorithm is well adapted to novel computational architectures and satisfies the loosely coupled requirements (low synchronicity and low communication overheads). In contrast to randomized approaches that sample a subset of the data matrix, our method looks at all the data and works well even in cases where the former algorithms fail. We will start with a geometric motivation of our approach and a discussion of how it differs from other approaches. The convergence analysis is split into the derivation of bounds on the local error occurring at individual nodes, and bounds on the global error accumulation. Several variants of the algorithm will be compared, and numerical experiments and applications in matrix optimization problems will also be discussed.

Ren Xiaoguang (National University of Defense Technology)

With the development of electronic technology, the processor count in a supercomputer has reached the million scale. However, the process count of an application is limited to several thousand, and scalability faces bottlenecks from several aspects, including I/O, communication and cache access. In this paper, we focus on the communication bottleneck to the scalability of linear algebraic equation solvers. We take the preconditioned conjugate gradient (PCG) method as an example and analyse the features of the communication operations in the PCG solver. We find that reduce communication is the most critical issue for the scalability of parallel iterative methods for solving linear algebraic equations. We propose a local residual error optimization scheme to eliminate part of the reduce communication operations in the parallel iterative method and thereby improve its scalability. Experimental results on the Tianhe-2 supercomputer demonstrate that our optimization scheme can significantly improve the scalability of the linear algebraic equation solver.
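To show where the reduce operations arise, here is a minimal serial sketch of textbook PCG in which every inner product is counted, since on a distributed machine each one would need a global reduction. It does not implement the local residual scheme proposed in the talk; the function name, the `apply_prec` callback and the counter are illustrative assumptions.

```python
def pcg(A, b, apply_prec, tol=1e-10, max_iter=200):
    """Textbook preconditioned CG for a dense SPD matrix given as a list of rows.

    Returns (x, iterations, reductions), where `reductions` counts the inner
    products, each of which would be a global reduce in a parallel run.
    """
    n = len(b)
    reductions = 0

    def dot(u, v):
        nonlocal reductions
        reductions += 1
        return sum(ui * vi for ui, vi in zip(u, v))

    def matvec(v):
        return [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]

    x = [0.0] * n
    r = list(b)                      # residual for the zero initial guess
    z = apply_prec(r)
    p = list(z)
    rz = dot(r, z)
    b_norm = dot(b, b) ** 0.5
    for it in range(1, max_iter + 1):
        Ap = matvec(p)
        alpha = rz / dot(p, Ap)      # reduction 1 of this iteration
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if dot(r, r) ** 0.5 <= tol * b_norm:   # reduction 2: convergence check
            return x, it, reductions
        z = apply_prec(r)
        rz_new = dot(r, z)           # reduction 3
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter, reductions
```

In this form every iteration performs up to three reductions, one of which is only the residual norm for the stopping test; checks of that kind are the natural candidates for replacement by cheaper local estimates.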

Maya Neytcheva (Uppsala University), Ali Dorostkar (Uppsala University), Dimitar Lukarski (Uppsala University), Björn Lund (Uppsala University), Yvan Notay (Université libre de Bruxelles), Peter Schmidt (Uppsala University)

We consider large scale computer simulations of the so-called Glacial Isostatic Adjustment model, describing the submergence or elevation of the Earth's surface as a response to redistribution of mass due to alternating glaciation and deglaciation periods.

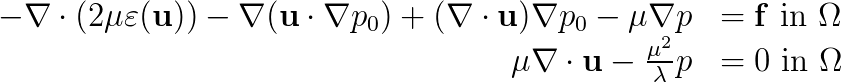

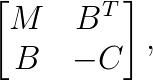

We consider a 2D model that is often used in the geophysics community, where self-gravitation is excluded. Due to the latter, the Earth is modelled as incompressible. The governing equations are formulated in terms of the Lamé coefficients, the strain tensor, the displacement vector, the so-called pre-stress, a body force and the so-called kinematic pressure, introduced in order to be able to simulate fully incompressible materials.

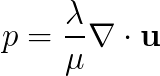

The model is discretized using the finite element method. It gives rise to algebraic systems of equations that are large, sparse, nonsymmetric and indefinite, and have a saddle point structure in which the pivot block is nonsymmetric.

These linear systems are solved using Krylov subspace iteration methods and a block preconditioner of block-triangular form, that requires inner-outer iterations. Algebraic multigrid is used as an inner solver. The efficiency of solving one system of the above type is crucial as it will be embedded in a time-evolution procedure, where systems with matrices with similar characteristics have to be solved repeatedly.

The simulations are performed using toolboxes from publicly available software packages - deal.ii, Trilinos, Paralution and AGMG. The numerical experiments are performed using OpenMP-type parallelism on multicore CPU systems as well as on GPU. We present performance results in terms of numerical and computational efficiency, number of iterations and execution time, and compare the timing results against a sparse direct solver from a commercial finite element package.

References:

deal.ii: http://www.dealii.org

Trilinos: http://trilinos.sandia.gov/

Paralution: http://www.paralution.com

AGMG: http://homepages.ulb.ac.be/~ynotay/AGMG/

Markus Blatt (HPC-Simulation-Software & Services)

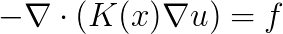

We present a parallel algebraic multigrid (AMG) method based on non-smoothed aggregation for preconditioning elliptic problems on bounded domains.

Our method is robust for highly variable or even discontinuous coefficients. It is developed for massively parallel supercomputers with a low amount of main memory per core. Due to a greedy heuristic used for the coarsening, the method has a low memory footprint and allows for scalable subsurface flow simulations with up to 150 billion unknowns on nearly 300 thousand cores.

The implementation is available as open source within the "Distributed and Unified Numerics Environment" (DUNE).

Seiji Fujino (Kyushu University), Chiaki Itou

We want to solve a linear system of equations $Ax = b$, where $A$ is a sparse real $n \times n$ matrix, and $x$ and $b$ are the solution and right-hand side vectors of order $n$, respectively. We split the matrix $A$ as $A = L + D + U$, where $L$, $U$ and $D$ denote the lower triangular, upper triangular and diagonal matrices, respectively. Moreover, we deal with preconditioned linear systems defined by a preconditioner matrix.

As is well known, the Eisenstat trick for symmetric SOR is an efficient preconditioning technique. In this algorithm, two matrices obtained by splitting the coefficient matrix $A$ are used in place of an incomplete factorization of $A$. Accordingly, it does not need much computational time for the multiplication by the preconditioner and the matrix. However, it is not suited to parallel computation. In particular, Eisenstat SSOR preconditioning is not executed efficiently on parallel computers with distributed memory.

In our talk, we will propose a Cache-Cache balancing technique for Eisenstat-type preconditioning on parallel computers with distributed memory. “Cache-cache” in French means “hide and seek” in English. The nonzero entries that were once hidden for parallelism are found again later in the computation in order to balance the original matrix. Through numerical experiments, we will make clear that the proposed Cache-Cache balancing technique for parallelism outperforms some conventional preconditionings.

Hartwig Anzt (University of Tennessee), Edmond Chow (Georgia Institute of Technology), Jack Dongarra (University of Tennessee)

Based on the premise that preconditioners needed for scientific computing are not only required to be robust in the numerical sense, but also scalable for up to thousands of light-weight cores, the recently proposed approach of computing an ILU factorization iteratively is a milestone in the concept of algorithms for Exascale computing. While ILU preconditioners, often providing appealing convergence improvement to iterative methods like Krylov subspace solvers, are among the most popular methods, their inherently sequential factorization is often daunting. Although improvement strategies like level-scheduling or multi-color ordering render some parallelism, the factorization often remains an expensive building block, especially when targeting large-scale systems. The iterative ILU computation offers an efficient workaround, as it allows for not only fine-grained parallelism, but also extensive asynchronism in the iterative component updates, providing the algorithmic characteristics needed for future HPC systems:

- The algorithm allows for scaling to any core count, while only the number of nonzero elements in the factorization sets an efficiency bound.
- Communication latencies are of minor impact, and hierarchical distribution of the data allows for reduction of the communication volume.
- The asynchronism implies algorithmic error-resilience, as hardware failures as well as soft errors result neither in a breakdown nor in the need for a restart.

This intrinsic fault-tolerance also removes the need for checkpointing strategies or error-resilient communication, both detrimental to performance. At the same time, the operation characteristics of streaming processors like GPUs promote inherently asynchronous algorithms. While implementing linear algebra routines usually requires careful synchronization, the tolerance for asynchronism makes the iterative ILU factorization an ideal candidate for efficient usage of the computing power provided by GPUs.

The increasing number of high-performance computing platforms featuring coprocessors like GPUs results in a strong demand for suitable algorithms. In this paper we review the iterative approach of computing the ILU factorization, and the potential of its GPU implementations. We evaluate different approaches that result in either totally asynchronous iteration, or algorithms in which subsets have a pre-defined update order, with asynchronous iteration between the subsets. For those block-asynchronous strategies, the convergence is ensured, as they arise as a special case of asynchronous iteration, and their performance might benefit from appealing cache reuse on the GPUs. In this case, the granularity of the asynchronism is raised to a block level, but in contrast to classical block-asynchronous methods, where blocks are usually formed out of adjacent vector components, this approach blocks matrix entries that are adjacent in memory, but not necessarily in the matrix. We compare the performance characteristics of the implementations featuring different asynchronism granularity for several matrix storage formats and matrix sparsity patterns. We also investigate the impact of the asynchronism. Using the shift representing the age of the oldest component used in an update as a control parameter, we analyze the trade-off between performance and convergence rate. Careful inspection reveals that the performance-optimal choice of the shift depends primarily on the hardware characteristics, while the properties of the matrix have a minor impact. Although the potential of the asynchronous ILU computation primarily pays off when targeting large-scale problems, we also include a brief comparison against direct ILU factorizations for problems of moderate size.
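The fixed-point view of the ILU(0) factorization can be sketched in a few lines. The code below is a serial, dense-storage illustration in which every sweep uses only values from the previous sweep (a Jacobi-style update that mimics fully parallel execution); it is not the GPU implementation discussed above, and the function names, the initial guess and the dictionary storage are assumptions made for the example.

```python
def iterative_ilu0(A, sweeps):
    """Approximate ILU(0) factors of A (list of rows) by fixed-point sweeps.

    L has an implicit unit diagonal; both factors are restricted to the
    sparsity pattern of A and stored as {(i, j): value} dictionaries.
    """
    n = len(A)
    pattern = [(i, j) for i in range(n) for j in range(n) if A[i][j] != 0.0]
    # Initial guess taken from A itself (strict lower part scaled by the diagonal).
    L = {(i, j): A[i][j] / A[j][j] for (i, j) in pattern if i > j}
    U = {(i, j): A[i][j] for (i, j) in pattern if i <= j}
    for _ in range(sweeps):
        L_old, U_old = dict(L), dict(U)   # every update reads the previous sweep only
        for (i, j) in pattern:
            s = sum(
                L_old[(i, k)] * U_old[(k, j)]
                for k in range(min(i, j))
                if (i, k) in L_old and (k, j) in U_old
            )
            if i > j:
                L[(i, j)] = (A[i][j] - s) / U_old[(j, j)]
            else:
                U[(i, j)] = A[i][j] - s
    return L, U


def ilu_residual(A, L, U):
    """Largest |(L U - A)_ij| over the sparsity pattern of A."""
    n = len(A)
    worst = 0.0
    for i in range(n):
        for j in range(n):
            if A[i][j] == 0.0:
                continue
            prod = sum(
                L[(i, k)] * U[(k, j)]
                for k in range(min(i, j))
                if (i, k) in L and (k, j) in U
            )
            prod += U[(i, j)] if i <= j else L[(i, j)] * U[(j, j)]
            worst = max(worst, abs(prod - A[i][j]))
    return worst
```

Because each entry update inside a sweep is independent of the others, the inner loop over the pattern can be executed in any order or concurrently, which is the source of the fine-grained parallelism; an asynchronous variant would simply read whatever values are currently available instead of the copies `L_old` and `U_old`.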

Lukasz Szustak (Częstochowa University of Technology), Krzysztof Rojek (Częstochowa University of Technology), Roman Wyrzykowski (Częstochowa University of Technology), Pawel Gepner (Intel Corporation)

The multidimensional positive definite advection transport algorithm (MPDATA) belongs to the group of nonoscillatory forward in time algorithms, and performs a sequence of stencil computations. MPDATA is one of the major parts of the dynamic core of the EULAG geophysical model.

The Intel Xeon Phi coprocessor is the first product based on the Intel Many Integrated Core (Intel MIC) architecture. This architecture offers notable performance advantages over traditional processors, and supports practically the same traditional parallel programming model. The Intel MIC architecture combines more than 50 cores (200 threads) within a single chip, and has at least 25 MB of aggregated L2 cache and 6 GB of on-board memory. The Intel Xeon Phi coprocessor is delivered in the form factor of a PCIe device.

In this work, we outline an approach to adapting the 3D MPDATA algorithm to the Intel MIC architecture. This approach is based on a combination of loop tiling and loop fusion techniques, and allows us to ease memory and communication bounds and better exploit the theoretical floating point efficiency of the target computing platforms. The proposed approach is then extended to clusters with Intel Xeon Phi coprocessors, using a mixture of the MPI and OpenMP programming standards. To this end, we use a hierarchical decomposition of MPDATA, covering both the cluster level and the distribution of computation across the cores of the Intel Xeon Phi. We discuss preliminary performance results achieved on a cluster with Intel Xeon Phi 5120D coprocessors.

Yves Ineichen (IBM Research - Zurich), Costas Bekas (IBM Research - Zurich), Alessandro Curioni (IBM Research - Zurich)

Over the years graph analytics has become a cornerstone for a wide variety of research areas and applications, and a respectable number of algorithms have been developed to extract knowledge from big and complex graphs. Unfortunately, the high complexity of these algorithms poses a severe restriction on the problem sizes. Indeed, in modern applications such as social or bio-inspired networks, there is a demand to analyze larger and larger graphs, whose sizes now routinely range in the tens of millions and reach into the billions in notable cases.

We believe that in the era of big data, accuracy has to be traded for algorithmic complexity and scalability to drive big data analytics. With this goal in mind we developed novel near-linear ($\mathcal{O}(N)$) methods for graph analytics. In this talk we will focus on two algorithms: estimating subgraph centralities (a measure of the importance of a node), and spectrograms (density of eigenvalues) of the adjacency matrix of the graph. On the one hand we discuss the underlying mathematical models, which rely on matrix trace estimation techniques. On the other hand we discuss the parallelism exhibited on multiple levels, which we exploit to attain good parallel efficiency on a large number of processes. To that end we explore the scalability of the proposed algorithms with benchmarks on huge datasets, e.g. the graph of the European street network containing 51 million nodes and 108 million non-zeros. Due to the iterative behavior of the methods, a very good estimate can be computed in a matter of seconds.

Both analytics have an immediate impact on visualizing and exploring large graphs arising in many fields.
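As an illustration of what a matrix trace estimation technique looks like, here is a minimal sketch of a Hutchinson-type stochastic estimator applied to the trace of exp(A), i.e. the sum of all subgraph centralities. It assumes Rademacher (random ±1) probe vectors and a truncated Taylor series for exp(A)v, so that only matrix-vector products are needed. The function names, the small path graph and the series length are illustrative assumptions, not the authors' method.

```python
import random


def expm_times_vector(adj, v, terms=30):
    """Approximate exp(A) v by a truncated Taylor series using only mat-vecs."""
    n = len(v)
    result = v[:]
    term = v[:]
    for k in range(1, terms):
        # term_k = A term_{k-1} / k, so term_k = A^k v / k!
        term = [sum(adj[i][j] * term[j] for j in range(n)) / k for i in range(n)]
        result = [r + t for r, t in zip(result, term)]
    return result


def hutchinson_trace(apply_f, probes):
    """Average the quadratic forms v^T f(A) v over the given probe vectors."""
    total = 0.0
    for v in probes:
        w = apply_f(v)
        total += sum(vi * wi for vi, wi in zip(v, w))
    return total / len(probes)


def rademacher_probes(n, count, seed=0):
    """Random vectors with independent entries +1 or -1."""
    rng = random.Random(seed)
    return [[rng.choice((-1.0, 1.0)) for _ in range(n)] for _ in range(count)]


# Adjacency matrix of a path graph on 4 nodes (toy example).
ADJ = [[0.0, 1.0, 0.0, 0.0],
       [1.0, 0.0, 1.0, 0.0],
       [0.0, 1.0, 0.0, 1.0],
       [0.0, 0.0, 1.0, 0.0]]

estimate = hutchinson_trace(lambda v: expm_times_vector(ADJ, v), rademacher_probes(4, 200))
```

Each probe costs a fixed number of matrix-vector products and the probes are independent of each other, which is one source of the multi-level parallelism mentioned above.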

Vaclav Hapla (Technical University of Ostrava), Martin Cermak (Technical University of Ostrava), David Horak (Technical University of Ostrava), Alexandros Markopoulos (Technical University of Ostrava), Lubomir Riha (Technical University of Ostrava)

Discretization of most engineering problems, described by partial differential equations, leads to large sparse linear systems of equations. However, problems that involve variational inequalities, such as those describing the equilibrium of elastic bodies in mutual contact, can be more naturally expressed as quadratic programming problems (QPs). A QP takes the form

\begin{align}
\min f(x) \quad \text{subject to} \quad x \in \Omega,
\end{align}

where $f$ is a quadratic function of the form $f(x) = \frac{1}{2} x^T A x - x^T b$ and $\Omega$ is a feasible set containing only $x$ satisfying zero or more prescribed constraints of any of the following forms

\begin{align}
B_E x &= c_E, \\
B_I x &\leq c_I, \\
l \leq x &\leq u,
\end{align}

where $A$ is the symmetric positive semidefinite Hessian matrix, $b$ is the right hand side vector, $B_E$ is the equality constraint matrix, $c_E$ is the equality constraint right hand side vector, $B_I$ is the inequality constraint matrix, $c_I$ is the inequality constraint right hand side vector, $l$ is the lower bound vector, and $u$ is the upper bound vector.
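To make the problem class concrete, the following minimal sketch solves a tiny QP that has only bound constraints, using plain projected gradient descent. This is a deliberately simple textbook method chosen for illustration and is not one of the algorithms implemented in PERMON. The names `A` and `b` for the Hessian matrix and right hand side vector, the helper functions, the step length and the example data are all assumptions made for this sketch.

```python
def matvec(A, x):
    """Dense matrix-vector product."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def objective(A, b, x):
    """Quadratic objective 0.5 * x^T A x - x^T b."""
    Ax = matvec(A, x)
    return 0.5 * sum(xi * axi for xi, axi in zip(x, Ax)) - sum(xi * bi for xi, bi in zip(x, b))


def project_onto_box(x, lower, upper):
    """Componentwise projection onto the feasible set {x : lower <= x <= upper}."""
    return [min(max(xi, lo), hi) for xi, lo, hi in zip(x, lower, upper)]


def projected_gradient(A, b, lower, upper, step, iterations):
    """Gradient step followed by projection; step must not exceed 1 / lambda_max(A)."""
    x = project_onto_box([0.0] * len(b), lower, upper)
    for _ in range(iterations):
        gradient = [axi - bi for axi, bi in zip(matvec(A, x), b)]
        x = project_onto_box([xi - step * gi for xi, gi in zip(x, gradient)], lower, upper)
    return x


# Toy data: symmetric positive definite Hessian with eigenvalues 1 and 3.
A = [[2.0, 1.0], [1.0, 2.0]]
b = [1.0, -4.0]
x = projected_gradient(A, b, lower=[0.0, 0.0], upper=[1.0, 1.0], step=0.3, iterations=200)
```

In this example the unconstrained minimizer (2, -3) lies outside the box, so the second component of the solution ends up on its lower bound while the first stays strictly inside the bounds.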

We shall introduce here our emerging software stack PERMON. It makes use of theoretical results in advanced quadratic programming algorithms, discretization and domain decomposition methods, and is built on top of the respected PETSc framework for scalable scientific computing, which provides parallel matrix algorithms and linear system solvers. The core solver layer of PERMON has arisen from splitting the former FLLOP (FETI Light Layer on Top of PETSc) package into several modules: PermonDDM (aka FLLOP), a library module for (the algebraic part of) domain decomposition methods (primarily those from the FETI family); PermonQP, a library module for quadratic programming; and PermonAIF, a pure C array-based wrapper of PermonDDM and PermonQP for users who want to take advantage of PERMON in their software but do not understand, want or need the object-oriented PETSc API. Other layers include application-specific solver modules such as PermonPlasticity, discretization tools such as PermonCube, and interfaces that allow FLLOP to be called from external discretization software such as libMesh or Elmer. We will present a snapshot of the current state and an outlook for the near future.

Alberto Garcia-Robledo (CINVESTAV), Arturo Diaz-Perez (CINVESTAV), Guillermo Morales-Luna (CINVESTAV)

There exists a growing interest in the reconstruction of a variety of technological, sociological and biological phenomena using complex networks. The emerging “science of networks” has motivated a renewed interest in classical graph problems such as the efficient computing of shortest paths and breadth-first searches (BFS). These algorithms are the building blocks for a new set of applications related to the analysis of the structure of massive real-world networks.

All-Sources BFS (AS-BFS) is a highly recurrent kernel in complex network applications. It consists of performing as many full BFS traversals as there are vertices in the network, each BFS starting from a different source vertex. AS-BFS is the core of a wide variety of global and centrality metrics that allow us to evaluate global aspects of the topology of huge networks and to determine the importance of a particular vertex.

The massive size of the phenomena that complex networks model leads to long processing times, which might be alleviated by exploiting modern hardware accelerators such as GPUs and multicores. However, existing results on accelerating BFS-related problems reveal a gap between complex network algorithms and current parallel architectures.

There is a useful duality between fundamental operations of linear algebra and graph traversals. For example, [Qian et al. 2012] propose a GPU approach that overcomes the irregular data access patterns on graphs from Electronic Design Automation (EDA) applications by moving BFS to the realm of linear algebra using sparse matrix-vector multiplications (SpMVs). Sparse matrix operations provide rich data-level parallelism, high arithmetic loads and clear data-access patterns, suggesting that an algebraic formulation of BFS can take advantage of the GPU data-parallel architecture.
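The duality can be made concrete with a minimal sequential sketch of an algebraic BFS. It assumes an undirected graph stored in CSR form (the arrays `row_ptr` and `col_idx`) and expresses each BFS level as one sparse matrix-vector product over the Boolean semiring, followed by masking out the vertices that were already visited. The names and the toy graph are illustrative assumptions and do not reproduce the GPU formulation of the cited work.

```python
def spmv_boolean(row_ptr, col_idx, x):
    """y = A x over the Boolean semiring (OR of ANDs) for a CSR matrix A."""
    n = len(row_ptr) - 1
    y = [False] * n
    for i in range(n):
        for p in range(row_ptr[i], row_ptr[i + 1]):
            if x[col_idx[p]]:
                y[i] = True
                break
    return y


def algebraic_bfs(row_ptr, col_idx, source):
    """Return the BFS level of every vertex (-1 if unreachable) from one source."""
    n = len(row_ptr) - 1
    level = [-1] * n
    frontier = [False] * n
    frontier[source] = True
    level[source] = 0
    depth = 0
    while any(frontier):
        depth += 1
        reached = spmv_boolean(row_ptr, col_idx, frontier)
        # Keep only vertices reached for the first time.
        frontier = [reached[i] and level[i] < 0 for i in range(n)]
        for i in range(n):
            if frontier[i]:
                level[i] = depth
    return level


def all_sources_bfs(row_ptr, col_idx):
    """AS-BFS: one full traversal per source vertex."""
    return [algebraic_bfs(row_ptr, col_idx, s) for s in range(len(row_ptr) - 1)]


# Toy undirected graph with edges 0-1, 0-2, 1-3, 2-3, 3-4 in CSR form.
ROW_PTR = [0, 2, 4, 6, 9, 10]
COL_IDX = [1, 2, 0, 3, 0, 3, 1, 2, 4, 3]
levels = all_sources_bfs(ROW_PTR, COL_IDX)
```

Every level costs one pass over all rows regardless of the frontier size, so the number of SpMVs per traversal grows with the graph diameter.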

Unfortunately, there is neither a systematic study of the algebraic BFS approach on non-EDA networks nor a study that compares the algebraic and visitor strategies. Is the algebraic approach adequate for accelerating measurements on complex networks? How do the two strategies compare on graphs with different structure?

In this paper, we develop an experimental study of the algebraic AS-BFS on GPUs and multicores, by methodologically comparing the visitation and the algebraic approaches on complex networks. We experimented on a variety of synthetic regular, random, and scale-free networks, in order to reveal the impact of the network topology on the GPU and multicore performance and show the suitability of the algebraic AS-BFS for these architectures.

We experimented with sparse and dense graphs with varying diameter, degree distribution and edge density. In order to create graphs with the desired structure, we made use of the well-known Gilbert and Barabási-Albert random graph models, which create uncorrelated random graphs with Poisson and scale-free degree distribution, respectively.

We found that structural properties present in complex networks influence the performance of AS-BFS strategies in the following (decreasing) order of importance: (1) whether the graph is regular or non-regular, (2) whether the graph is scale-free or not, and (3) the graph density. We also found that even though the SpMV approach is not well suited for multicores, the GPU SpMV strategy can outperform the GPU visitor strategy on low-diameter graphs.

In addition, we show that CPUs and GPUs are suitable for complementary kinds of graphs, with GPUs being better suited for random sparse graphs. The presented empirical results might be useful for the design of a hybrid network processing strategy that takes into account how the different algorithmic BFS approaches perform on networks with different structure.

Eric Darve (Stanford University)

In recent years there has been a resurgence in direct methods to solve linear systems. These methods can have many advantages compared to iterative solvers; in particular, their accuracy and performance are less sensitive to the distribution of eigenvalues. However, they typically have a larger computational cost in cases where iterative solvers converge in few iterations. We will discuss a recent trend of methods that address this cost and can make these direct solvers competitive. Techniques involved include hierarchical matrices, hierarchically semi-separable matrices, the fast multipole method, etc.

Wim Vanroose (University of Antwerp)

The main algorithmic components of a preconditioned Krylov method are the dot product and the sparse matrix-vector product. On modern HPC hardware the performance of preconditioned Krylov methods is severely limited by two communication bottlenecks. First, each dot product has a large latency due to the synchronization and global reduction involved. Second, each sparse matrix-vector product suffers from the limited memory bandwidth because it performs only a few floating point operations for each byte read from main memory. In this talk we discuss how Krylov methods can be redesigned to alleviate these two communication bottlenecks.
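To show where these two kernels occur, here is a minimal sketch of the textbook (unpreconditioned) conjugate gradient method, instrumented with counters for dot products and matrix-vector products. In a distributed setting each counted dot product would correspond to a global reduction. This is the classical algorithm, not one of the redesigned variants the talk refers to, and the function names and test system are assumptions made for illustration.

```python
def laplacian_1d_matvec(p):
    """Apply the tridiagonal matrix with stencil (-1, 2, -1) without storing it."""
    n = len(p)
    return [2.0 * p[i] - (p[i - 1] if i > 0 else 0.0) - (p[i + 1] if i < n - 1 else 0.0)
            for i in range(n)]


def conjugate_gradient(matvec, b, tol=1e-12, max_iter=100):
    """Textbook CG starting from x = 0; returns the solution, iterations and kernel counts."""
    counts = {"spmv": 0, "dot": 0}

    def dot(u, v):
        counts["dot"] += 1  # one global reduction in a parallel run
        return sum(ui * vi for ui, vi in zip(u, v))

    x = [0.0] * len(b)
    r = b[:]
    p = r[:]
    rr = dot(r, r)
    iterations = 0
    while rr ** 0.5 > tol and iterations < max_iter:
        Ap = matvec(p)
        counts["spmv"] += 1
        alpha = rr / dot(p, Ap)  # first synchronization point of the iteration
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rr_new = dot(r, r)  # second synchronization point of the iteration
        p = [ri + (rr_new / rr) * pi for ri, pi in zip(r, p)]
        rr = rr_new
        iterations += 1
    return x, iterations, counts


b = [1.0, 0.0, 0.0, 0.0, 1.0]
x, iterations, counts = conjugate_gradient(laplacian_1d_matvec, b)
```

Each iteration performs one matrix-vector product and two dot products, and the second dot product cannot start until the residual update that depends on the first has finished.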

Eike Mueller (University of Bath), Robert Scheichl (University of Bath)

The continuous increase in model resolution in all areas of geophysical modeling requires the development of efficient and highly scalable solvers for very large elliptic PDEs. For example, implicit time stepping methods in weather and climate prediction require the solution of an equation for the atmospheric pressure correction at every time step and this is one of the computational bottlenecks of the model. The discretized linear system has around  unknowns if the horizontal resolution is increased to around 1 kilometer in a global model run and weather forecasts can only be produced at operational time scales at this resolution if the PDE can be solved in a fraction of a second. Only solvers which are algorithmically scalable and can be efficiently parallelised on  processor cores can deliver this performance. As the equation is solved in a thin spherical shell representing the earth's atmosphere, the solver has to be able to handle very strong vertical anisotropies; equations with a similar structure arise in many other areas in the earth sciences such as global ocean models, subsurface flow simulations and ice sheet models.

We compare the algorithmic performance and massively parallel scalability of different Krylov-subspace and multigrid solvers for the pressure correction equation in semi-implicit semi-Lagrangian time stepping for atmospheric models. We studied both general purpose AMG solvers from the DUNE (Heidelberg) and hypre (Lawrence Livermore Lab) libraries and developed a bespoke geometric multigrid preconditioner tailored to the geometry and anisotropic structure of the equation.